After Nvidia announced the GeForce GTX 1660 Ti, we knew there would most likely be a non-Ti version. It was announced on 15 March, and we have already covered the basics. Still, here they are again.

The GeForce GTX 1660 is based on the same TU116 chip, and there are three main differences:

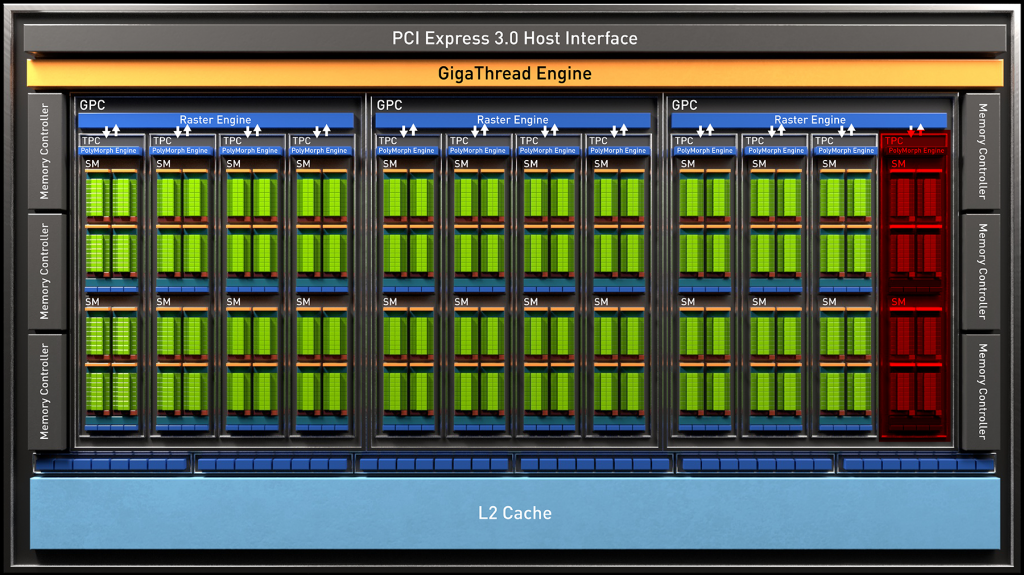

- The new model loses one of the 12 Texture Processing Clusters (TPC), which means 128 CUDA cores and 8 texture units, leaving it with 11 TPCs, 1408 CUDA cores and 88 texture units. That is about 8% less compute power.

- The other big change is the memory type. The GeForce GTX 1660 Ti uses 6 GB of 12 Gbps GDDR6 memory, while the vanilla GTX 1660 is equipped with 6 GB of 8 Gbps GDDR5 memory. Since both cards use a 192-bit memory bus, that is a massive 33% reduction in memory bandwidth.

- Finally, the new model has slightly higher clocks: the base clock is 30 MHz higher and the boost clock 15 MHz higher, for 1530 MHz base and 1785 MHz boost. Factory-overclocked models are expected to boost to 1830 MHz.
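To show where the 8% and 33% figures come from, here is a small back-of-the-envelope sketch. It assumes a 192-bit memory bus on both cards and estimates FP32 throughput as 2 FLOPs (one fused multiply-add) per CUDA core per clock. The GTX 1660 Ti values (1536 cores, 1770 MHz boost) are derived from the differences listed above; the function names are purely illustrative.

```python
# Back-of-the-envelope spec comparison: GTX 1660 Ti vs GTX 1660 (reference clocks).
# Assumptions: 192-bit memory bus on both cards; FP32 throughput counted as
# 2 FLOPs (one fused multiply-add) per CUDA core per clock at boost clock.

BUS_WIDTH_BITS = 192  # assumed TU116 memory bus width for both cards

cards = {
    "GTX 1660 Ti": {"cuda_cores": 1536, "boost_mhz": 1770, "mem_gbps": 12},
    "GTX 1660": {"cuda_cores": 1408, "boost_mhz": 1785, "mem_gbps": 8},
}


def bandwidth_gb_s(mem_gbps: float, bus_bits: int = BUS_WIDTH_BITS) -> float:
    """Peak memory bandwidth in GB/s: per-pin data rate x bus width / 8 bits per byte."""
    return mem_gbps * bus_bits / 8


def fp32_tflops(cores: int, boost_mhz: float) -> float:
    """Theoretical FP32 throughput in TFLOPS at boost clock."""
    return cores * 2 * boost_mhz * 1e6 / 1e12


def reduction_pct(old: float, new: float) -> float:
    """Relative drop from old to new, in percent."""
    return (old - new) / old * 100


ti, vanilla = cards["GTX 1660 Ti"], cards["GTX 1660"]

bw_ti = bandwidth_gb_s(ti["mem_gbps"])
bw_1660 = bandwidth_gb_s(vanilla["mem_gbps"])
print(f"Memory bandwidth: {bw_ti:.0f} GB/s -> {bw_1660:.0f} GB/s "
      f"({reduction_pct(bw_ti, bw_1660):.1f}% less)")

cores_drop = reduction_pct(ti["cuda_cores"], vanilla["cuda_cores"])
print(f"CUDA cores: {ti['cuda_cores']} -> {vanilla['cuda_cores']} ({cores_drop:.1f}% fewer)")

tf_ti = fp32_tflops(ti["cuda_cores"], ti["boost_mhz"])
tf_1660 = fp32_tflops(vanilla["cuda_cores"], vanilla["boost_mhz"])
print(f"FP32 at boost: {tf_ti:.2f} -> {tf_1660:.2f} TFLOPS "
      f"({reduction_pct(tf_ti, tf_1660):.1f}% less)")
```

Under these assumptions, the bandwidth drops from 288 GB/s to 192 GB/s. The shader count falls by about 8.3%, while the slightly higher boost clock narrows the theoretical FP32 gap to roughly 7.6%.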

Power consumption stays the same, with a TDP rating of 120 W. The base price should be $219.

For this review we managed to get samples of both the GeForce GTX 1660 and the GTX 1660 Ti in the form of Palit StormX models. On the outside, the cards are basically identical to the previously reviewed Gainward GeForce GTX 1660 Ti Pegasus. That is no surprise, as both brands share the same parent company.

The cards use the same ITX form factor, with a single 10 cm fan and three heatpipes in the cooler. Both are the OC version of the StormX, which means the boost clock was raised to 1830 MHz for the GTX 1660 and to 1815 MHz for the GTX 1660 Ti. Despite lacking a zero-fan mode, both cards are practically silent at idle; under load they are noticeable, but not exactly loud.

To get a fuller picture, we also added the MSI GeForce GTX 1060 6 GB Armor and the XFX Radeon RX 590 Fatboy. The former is the direct predecessor of the GTX 1660, and the latter recently received a price cut to $219, putting it in direct competition with the new Nvidia card.

And now let’s get to testing.